AI Moderation Tools Being Adopted Across Social Platforms

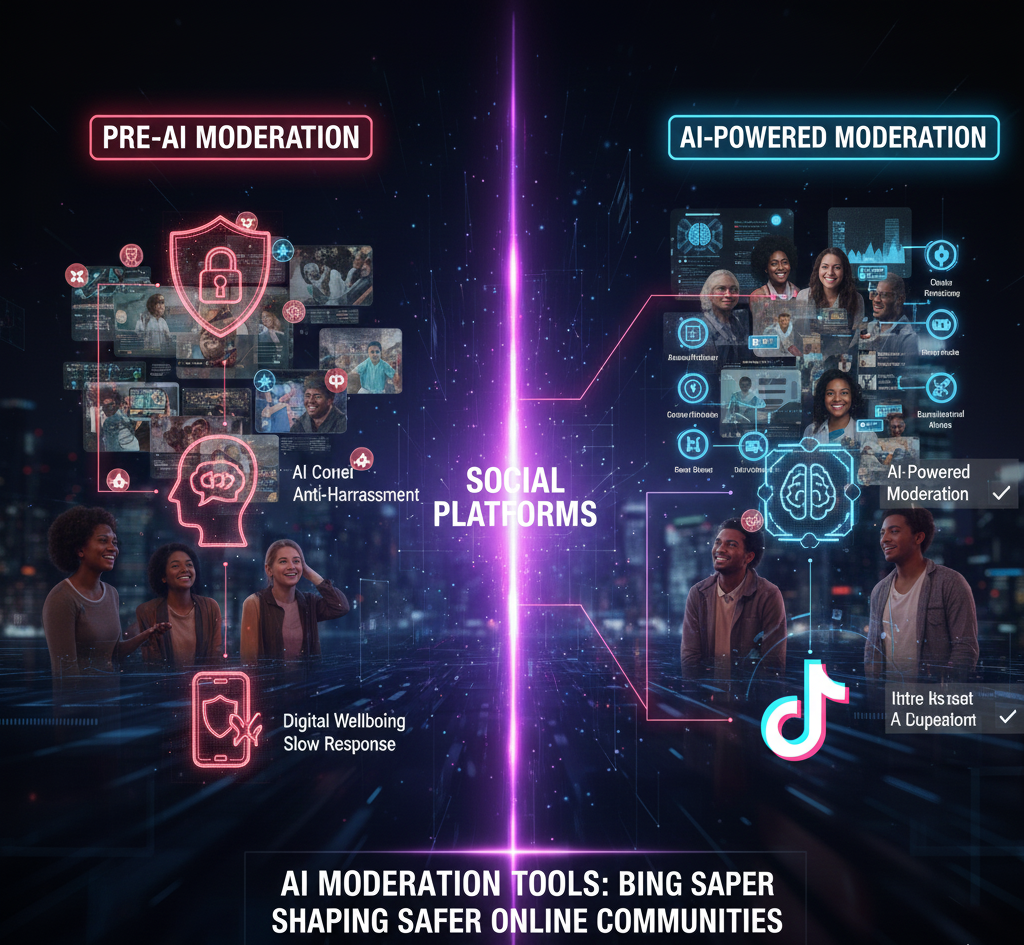

In 2025, social media platforms are increasingly relying on AI moderation to keep online communities healthier and safer. As the volume of user-generated content grows, platforms are using automated tools to identify dangerous content, reduce harassment, and enforce community standards more effectively.

Why AI Moderation Is Becoming Increasingly Important

The scale of modern social networks makes manual moderation very difficult. With millions of posts, comments, videos, and photos submitted regularly, harmful or inappropriate content is hard to catch quickly. AI moderation tools automatically scan and flag content that violates platform standards, including hate speech, harassment, disinformation, explicit material, and self-harm content. By screening out harmful content before it spreads widely, these tools help platforms maintain safer digital environments.

How AI Moderation Works

AI moderation tools use machine learning and natural language processing to identify violations. Text is analyzed for potentially harmful terms, context, and sentiment. Images and videos are checked for graphic sexual content, depictions of violence, and other prohibited material. Some systems can now recognize subtle forms of manipulation, such as deepfake media, which helps platforms respond to new risks in real time.

In addition to automatic detection, AI systems often route certain content to human reviewers. This hybrid approach combines the speed and scalability of automation with nuanced human judgment, which reduces false positives and lets moderators concentrate on the more complicated cases.
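The hybrid approach can be illustrated with a minimal Python sketch. It assumes an upstream classifier has already produced a violation score between 0 and 1 for each item. The threshold values, the `Decision` type, and the `route` function are hypothetical names chosen for illustration, not any platform's actual implementation.

```python
from dataclasses import dataclass

# Hypothetical thresholds (assumptions for illustration only);
# a real platform would tune these per policy and content type.
AUTO_REMOVE_THRESHOLD = 0.95
HUMAN_REVIEW_THRESHOLD = 0.60


@dataclass
class Decision:
    action: str   # "remove", "review", or "allow"
    score: float  # violation score from the upstream classifier


def route(score: float) -> Decision:
    """Route one item based on its violation score in [0, 1]."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= AUTO_REMOVE_THRESHOLD:
        # High confidence: act automatically for speed and scale.
        return Decision("remove", score)
    if score >= HUMAN_REVIEW_THRESHOLD:
        # Uncertain: send to a human moderator for nuanced judgment.
        return Decision("review", score)
    # Low score: leave the content up.
    return Decision("allow", score)
```

Widening the band between the two thresholds sends more items to human reviewers, which trades moderator workload for fewer automated mistakes.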

Applications Across Platforms

Social media platforms are implementing AI moderation in different ways. Many use it to block violent or explicit material before it is posted. Others automatically moderate comments on high-traffic posts to prevent harassment. To combat disinformation, AI is also used to flag potentially false posts for review before they spread widely.

These tools are increasingly built into video-sharing, chat, and live-streaming platforms, where they help reduce harmful interactions while preserving the user experience. By spotting potential risks proactively, AI moderation improves safety for both creators and general users.

Benefits for Users and Communities

AI moderation benefits users by creating safer environments and reducing their exposure to abusive or dangerous content. For creators, it helps protect their communities from abuse and spam, which encourages healthy participation. Communities benefit when platforms enforce rules consistently, which in turn fosters trust and supports long-term growth.

AI moderation can also serve as a preventive measure. By identifying possible violations early, such as online bullying or organized harassment campaigns, platforms can lower the risk of escalation.

Challenges and Considerations

Despite its benefits, AI moderation has its difficulties. Automated systems are not infallible, and they sometimes misread context, which can lead to content being wrongly flagged or removed. Nuanced language, cultural differences, and new slang make sarcasm especially hard to identify. To avoid excessive censorship or bias, platforms must strike a balance between automated and human review.

Transparency matters as well. Users benefit from understanding why content was removed or flagged, and platforms are increasingly providing explanations, appeals mechanisms, and moderation reports to build trust and accountability.

The Future of AI Moderation

AI moderation is expected to become more sophisticated, with better accuracy, stronger contextual understanding, and real-time response. Multimodal AI, which can assess text, audio, and images together, is emerging as a powerful tool for identifying complex violations.

Platforms will most likely continue to combine AI with human oversight so that moderation is both effective and fair. Ongoing research into reducing bias and applying ethical AI practices will also shape how moderation systems are deployed across different communities and regions.

AI moderation is changing how social media platforms uphold safety and enforce community standards. By automating the detection of harmful content while supplementing human oversight, these tools help protect users, encourage positive participation, and make online environments safer. As AI continues to develop, the moderation that users and creators experience is expected to become more efficient, more responsive, and more context-aware.