Which Platforms Are Most Effective at Enforcing Hate Speech Policies in 2025?

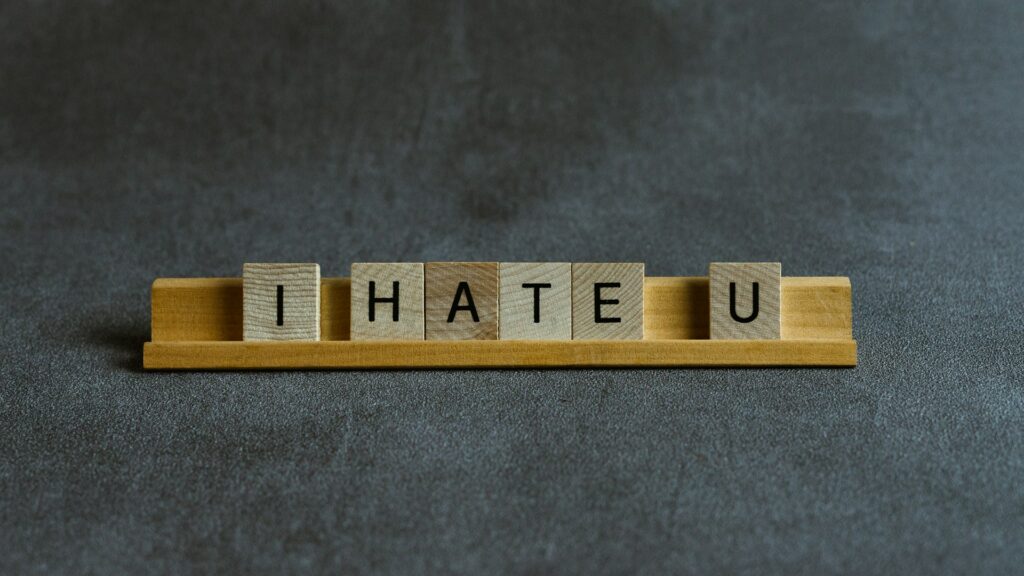

Hate speech remains one of the most persistent challenges social media platforms face. Online communities can support free expression, but when hate speech, harassment, and extremist ideology go unchecked, those same communities can become toxic environments. In 2025, platforms are expected to show not only that they publish robust rules against hate speech but also that they enforce those rules consistently and transparently. Scrutiny is greater than it has ever been.

In this article, we look at how major platforms are currently dealing with hate speech, which techniques are proving effective, where gaps remain, and which platforms seem to be setting the bar for enforcement.

Why Hate Speech Policies Matter More Than Ever

Social media has become the digital equivalent of the public square, shaping politics, culture, and everyday life. Without effective moderation:

- Marginalized communities face harassment and silencing.

- Extremist narratives spread unchecked.

- Advertisers and brands hesitate to spend on platforms seen as risky.

Governments have responded with stricter rules, and users have demanded accountability. For platforms, vigorous enforcement of hate speech policies has shifted from an ethical obligation to a survival strategy for preserving trust and growth.

What Platforms Consider Hate Speech

A persistent problem is that there is no universally accepted definition of hate speech. Even so, most platforms prohibit:

- Direct attacks on people or groups based on nationality, race, religion, gender, sexual orientation, disability, or other characteristics.

- Content that encourages violence or exclusion.

- Organized campaigns of abuse and harassment.

Enforcement, however, varies widely depending on a platform's moderation tools, resources, and willingness to act against powerful accounts.

The Platforms That Are Leading the Pack

1. TikTok

Alongside a sizable human review staff, TikTok has invested heavily in AI-driven moderation tools. It quickly removes hateful content once detected and bans repeat offenders. In 2025, TikTok is praised for its transparent moderation reports, which cover appeal volumes, enforcement figures, and community impact.

2. Instagram (Meta)

Instagram has added proactive detection tools that scan videos, comments, and captions before they go public. Features such as comment filtering and “hidden replies” let users protect themselves from abuse. Meta has also introduced region-specific enforcement, recognizing cultural differences in how hate speech manifests.

3. YouTube

YouTube has made significant progress, particularly through stricter monetization requirements for creators. Rapidly demonetizing borderline extremist or hateful content reduces the financial incentive for creators who spread harmful ideas. YouTube has also adjusted its recommendation system to limit the amplification of such videos.

Platforms That Continue to Struggle

4. X (formerly known as Twitter)

X has been criticized for uneven enforcement despite its stated commitment to “freedom of speech, not freedom of reach.” Since it cut its moderation staff, the amount of hateful content on the platform has increased. Critics argue that enforcement appears selective, especially for high-profile accounts.

5. Facebook

Although Facebook has robust rules on paper, its enormous user base makes them hard to enforce at scale. Hate speech often resurfaces in private groups and live broadcasts, where timely moderation is more difficult.

Smaller Platforms

Newer platforms such as Truth Social, Gab, and Mastodon take widely differing positions. Some deliberately adopt looser moderation in the name of free expression, which can attract groups with extremist ideologies. The result can be echo chambers in which hate speech flourishes unchecked.

Tools and Methods That Are Making a Difference

- AI Moderation and Human Review: Combining the two pairs the speed of automation with contextual human judgment.

- Transparency Reports: Publicly releasing enforcement data builds trust.

- User Empowerment Tools: Filters, blocklists, and reporting features help users limit their exposure to harmful content.

- Pressure from Advertisers: Platforms that do not act against hate speech often face boycotts, which push them to adopt stricter standards.

Regional Differences in Enforcement

Under new digital rules in the European Union, platforms are required to report how much hate speech they remove, and those that fail to comply risk penalties. In the United States, stronger free speech protections give platforms greater leeway in enforcement, but platforms there are also coming under increasing criticism from civil rights organizations and advertisers.

Several Asian countries, including India and Indonesia, have demanded faster removal of hateful content. This has at times sparked debate over whether governments are also suppressing dissent under the pretext of regulating hate speech.

Which Platforms Enforce Best?

As of 2025, TikTok, Instagram, and YouTube appear to set the standard for policing hate speech, thanks to their proactive systems, open reporting, and explicit standards. They are not flawless, but they show demonstrable improvement. X and some smaller networks continue to fall behind, often placing a higher priority on user growth or free speech arguments than on strict enforcement of their rules.

The Road Ahead

The fight against online hate speech will continue to evolve as:

- Artificial intelligence gets better at discerning context, sarcasm, and coded language.

- Community reporting tools increase user involvement.

- International rules push platforms toward consistent enforcement.

In the end, the platforms that balance user safety with freedom of speech, while being clear about their efforts, will earn the greatest confidence from both users and regulators.

In 2025, the debate is no longer about whether hate speech should be removed from the internet but about how well platforms enforce their own standards. Strong enforcement protects vulnerable communities, keeps public conversation healthy, and keeps advertisers from walking away. The leading platforms are demonstrating that it is possible to respect free expression while actively limiting the spread of harmful content. Those that fall behind may eventually face not only public anger but also stricter government intervention.